Meta Reality Labs Multimodal Interaction

AR/VR Product Design / HCI

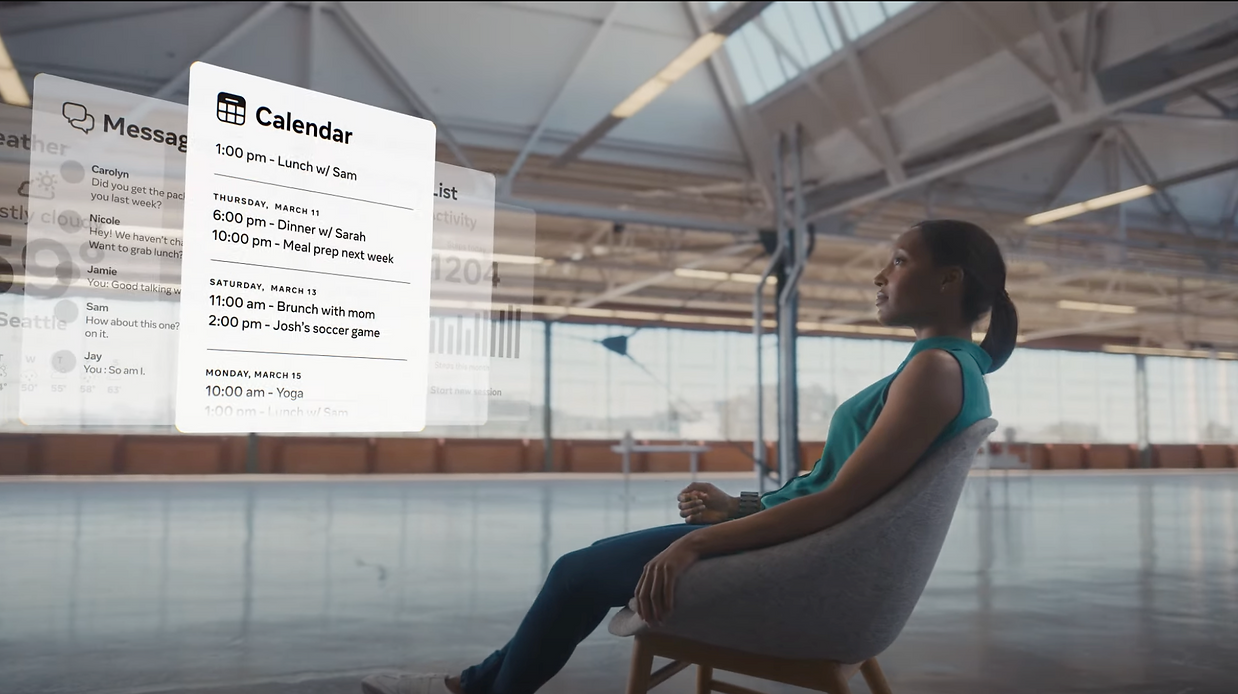

At Meta Reality Labs, I work on defining what AI hardware and XR interaction will look like over the next 3–5 years. As a lead product designer, I collaborate with scientists, engineers, and researchers to turn emerging technologies into clear interaction visions and meaningful user experiences.

AI Voice Interface for Quest Horizon OS

Selected Published Works

I led the end-to-end design of AI voice interaction for Meta Quest, architecting the system to support a conversational AI interface. Key projects include:

- User barge-in and follow-up logic
- Camera-based intent inference
- Audio concurrency
- Visual and earcon feedback

Starting with Horizon OS V85, users can navigate Quest hands-free, performing all OS-level input primitives with voice and a head cursor.
